The "USB-C Moment" for AI: Why MCP is Murdering Integration Hell

Written by Joseph on January 2, 2026

Let’s be honest. Getting AI to work with your own data feels less like engineering and more like being a full-time plumber for a leaky brain. You spend days crafting custom functions so GPT-5.2 can query your database. It works! Then your team says, “Let’s try Claude 4.5.”

Boom.

Half your code turns into digital confetti because Anthropic’s tool-calling schema is different. You’re not building intelligence—you’re building brittle, one-off pipes that break with every model update.

This is the NxM Integration Nightmare. It’s “Dongle Hell” for your AI stack. Ten models × ten data sources = one hundred custom bridges to build and maintain. It’s exhausting, expensive, and locks you into vendors.
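To put numbers on that, here is a quick back-of-the-envelope sketch. The function names are my own, and the second count assumes a shared standard where each model and each data source needs exactly one adapter, which is the idea the rest of this post builds toward.

```python
def point_to_point(models: int, sources: int) -> int:
    """Every model needs its own custom bridge to every data source."""
    return models * sources


def shared_standard(models: int, sources: int) -> int:
    """One adapter per model plus one adapter per data source."""
    return models + sources


if __name__ == "__main__":
    for n in (3, 10, 25):
        print(f"{n} models x {n} sources: "
              f"{point_to_point(n, n)} bridges vs {shared_standard(n, n)} adapters")
```

The gap widens as the stack grows: the first count scales with the product, the second with the sum.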

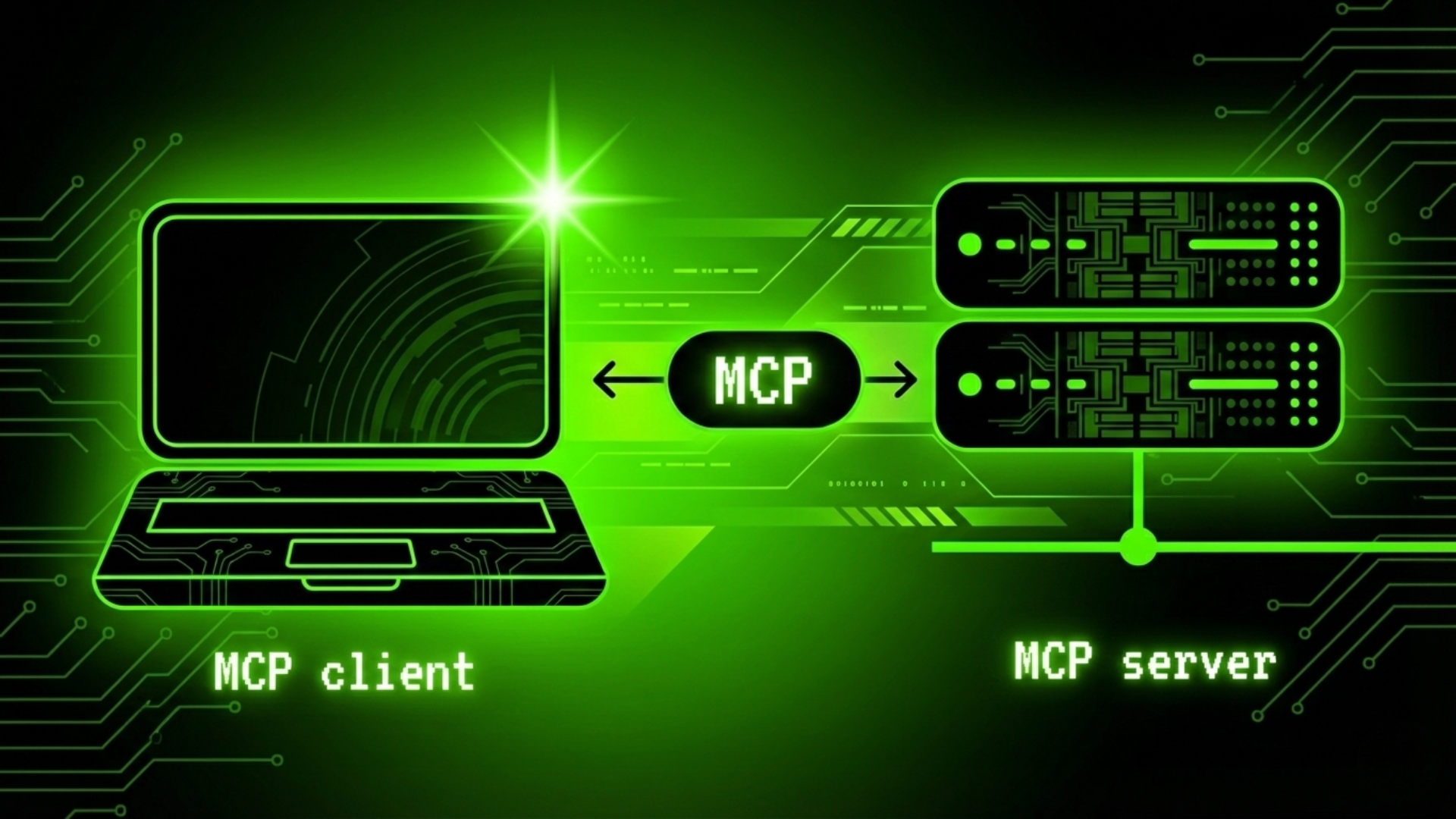

Enter the Model Context Protocol (MCP). This isn’t another API. It’s USB-C for artificial intelligence. You build one standard port for your data, and any MCP-aware client can plug in. No per-model rewrites of your data layer. No compatibility charts. Just one universal connector that makes AI tools interoperable.

The Magic: Your Data Speaks One Language

Here’s the revolutionary part: You don’t teach each AI your system. You teach your system to speak MCP.

The Server (Your Data’s Translator): This is the Python script you write once. It lives with your data—on your laptop, your company server, anywhere. It knows how to talk to your SQL database, internal API, or local files. Its only job is to translate between your systems and the MCP standard.

The Client (The Universal Adapter): This is what connects to the AI. It could be Claude Desktop, a custom app you’re building, or a script using OpenAI’s API. It speaks MCP to your server and the AI’s native language to the model.

The AI (The Brain): Any model that supports function calling—GPT-5, Claude 3.5, Gemini, Llama—can now use your tools. The client handles the translation.

You’re not building 100 bridges anymore. You’re building one universal dock (your MCP server) and a simple cable (the client) for each AI you use.
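Here is roughly what the "cable" boils down to, as a sketch. The tool description below is a hand-written stand-in for what a server advertises (a name, a description, and a JSON Schema under `inputSchema`), and the two helper names are hypothetical. The output shapes mirror the ones used by the OpenAI and Anthropic clients later in this post.

```python
from typing import Any

# Stand-in for one tool as an MCP server would describe it.
# Written by hand for illustration, not captured from a real server.
MCP_TOOL: dict[str, Any] = {
    "name": "check_stock_level",
    "description": "Checks stock level for a specific item by name.",
    "inputSchema": {
        "type": "object",
        "properties": {"item_name": {"type": "string"}},
        "required": ["item_name"],
    },
}


def to_openai(tool: dict[str, Any]) -> dict[str, Any]:
    """Wraps the tool in the Chat Completions 'function' envelope."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool["description"],
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic(tool: dict[str, Any]) -> dict[str, Any]:
    """Renames inputSchema to the input_schema key the Messages API expects."""
    return {
        "name": tool["name"],
        "description": tool["description"],
        "input_schema": tool["inputSchema"],
    }


if __name__ == "__main__":
    print(to_openai(MCP_TOOL))
    print(to_anthropic(MCP_TOOL))
```

The JSON Schema itself passes through untouched in both cases. Only the wrapper around it differs, which is why each cable stays small.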

Build the Dock: A Production-Ready Data Server

In a real application, you separate concerns. Let’s build this properly with clean architecture.

File Structure

```
mcp_data_dock/
├── .env                  # Configuration (DB path, etc.)
├── database/
│   ├── init.py           # Database setup and seeding
│   └── schema.sql        # Pure SQL table definitions
├── server.py             # Clean MCP server (tools only)
└── clients/              # Different AI connections
    ├── openai_client.py
    └── anthropic_client.py
```

1. Database Setup (database/init.py)

First, let’s create and seed our database separately. The server shouldn’t care about initialization.

```python
# database/init.py
import asyncio
import os

import aiosqlite

DB_PATH = os.getenv("DATABASE_PATH", "company_data.db")

# (id, item_name, stock_level, category)
SAMPLE_DATA = [
    (1, 'GeForce RTX 4090', 5, 'GPUs'),
    (2, 'MacBook Pro M3 Max', 12, 'Laptops'),
    (3, 'Mechanical Keyboard', 45, 'Peripherals'),
    (4, '32" 4K Monitor', 22, 'Peripherals'),
    (5, 'Radeon RX 7900 XTX', 8, 'GPUs'),
]


async def init_database():
    """Creates database schema and seeds initial data."""
    async with aiosqlite.connect(DB_PATH) as db:
        # Create schema (path is relative, so run this from the project root)
        with open('database/schema.sql', 'r') as f:
            await db.executescript(f.read())

        # Seed with sample data; OR IGNORE keeps reruns from failing on existing ids
        await db.executemany(
            "INSERT OR IGNORE INTO inventory VALUES (?, ?, ?, ?)",
            SAMPLE_DATA
        )
        await db.commit()

    print(f"✅ Database ready at {DB_PATH}")
    return DB_PATH


if __name__ == "__main__":
    asyncio.run(init_database())
```

2. Database Schema (database/schema.sql)

```sql
-- database/schema.sql
CREATE TABLE IF NOT EXISTS inventory (
    id INTEGER PRIMARY KEY,
    item_name TEXT NOT NULL,
    stock_level INTEGER,
    category TEXT
);

CREATE INDEX IF NOT EXISTS idx_category ON inventory(category);
```

3. The Clean MCP Server (server.py)

Now, the server focuses only on exposing tools, not database setup.

```python
# server.py
import os

import aiosqlite
from mcp.server.fastmcp import FastMCP

mcp = FastMCP("Inventory Master")
DB_PATH = os.getenv("DATABASE_PATH", "company_data.db")


@mcp.tool()
async def query_inventory(sql_query: str) -> str:
    """
    Executes SQL SELECT queries on the inventory database.

    ⚠️ DEMO ONLY: In production, validate queries or use parameterized
    methods to prevent SQL injection.
    """
    async with aiosqlite.connect(DB_PATH) as db:
        db.row_factory = aiosqlite.Row  # Rows become dict-like
        async with db.execute(sql_query) as cursor:
            rows = await cursor.fetchall()
            if not rows:
                return "No results found."
            return str([dict(row) for row in rows])


@mcp.tool()
async def check_stock_level(item_name: str) -> str:
    """Checks stock level for a specific item by name."""
    async with aiosqlite.connect(DB_PATH) as db:
        # Parameterized query: item_name is never spliced into the SQL text
        async with db.execute(
            "SELECT stock_level FROM inventory WHERE item_name = ?",
            (item_name,)
        ) as cursor:
            result = await cursor.fetchone()
            return f"Stock level: {result[0]}" if result else "Item not found."


if __name__ == "__main__":
    # Server assumes database is already set up
    mcp.run()
```

Run it once to set up:

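```shell
# Initialize the database
python database/init.py

# Optional: start the MCP server by hand to confirm it launches.
# It speaks MCP over stdin/stdout, so it will sit and wait for a client.
# The client scripts below spawn their own copy, so you can stop it with Ctrl+C.
DATABASE_PATH=./company_data.db python server.py
```

Build the Cables: Connect ANY LLM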

Cable 1: OpenAI GPT-5.2 Cable (clients/openai_client.py)

```python
# clients/openai_client.py
# Run from the project root: python clients/openai_client.py
import asyncio
import json
import os

from openai import AsyncOpenAI
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

# Use one model for both calls; swap in any tool-calling model you have access to
MODEL = "gpt-5.2-pro"

# Initialize OpenAI
ai_client = AsyncOpenAI(api_key=os.getenv("OPENAI_API_KEY"))

# Launch our MCP server as a subprocess and talk to it over stdio
server_connection = StdioServerParameters(
    command="python",
    args=["server.py"],  # Our clean server
    env={"DATABASE_PATH": "company_data.db"},  # Pass config via env
)


def tool_text(result) -> str:
    """Joins the text blocks of an MCP tool result into one string."""
    return "\n".join(b.text for b in result.content if hasattr(b, "text"))


async def ask_ai(question: str):
    """Ask an AI a question using our MCP tools."""
    async with stdio_client(server_connection) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()

            # Discover available tools
            available_tools = await session.list_tools()
            print(f"🔌 Connected! Found {len(available_tools.tools)} tools")

            # Translate MCP tool descriptions into OpenAI's function format
            openai_tools = [{"type": "function", "function": {
                "name": t.name,
                "description": t.description,
                "parameters": t.inputSchema,
            }} for t in available_tools.tools]
            messages = [{"role": "user", "content": question}]

            # Ask the model
            response = await ai_client.chat.completions.create(
                model=MODEL, messages=messages, tools=openai_tools
            )
            ai_message = response.choices[0].message

            if not ai_message.tool_calls:
                # The model answered without needing a tool
                print(f"🤖 AI Analysis: {ai_message.content}")
                return

            messages.append(ai_message)
            for tool_call in ai_message.tool_calls:
                # Arguments arrive as a JSON string; the MCP session expects a dict
                result = await session.call_tool(
                    tool_call.function.name,
                    arguments=json.loads(tool_call.function.arguments),
                )
                data = tool_text(result)
                print(f"📊 Server Data: {data[:100]}...")
                messages.append({
                    "role": "tool",
                    "tool_call_id": tool_call.id,
                    "content": data,
                })

            # Get final answer once every requested tool result is in the conversation
            final_response = await ai_client.chat.completions.create(
                model=MODEL, messages=messages, tools=openai_tools
            )
            print(f"🤖 AI Analysis: {final_response.choices[0].message.content}")


# Run it
if __name__ == "__main__":
    asyncio.run(ask_ai("What's our total GPU stock and which item is lowest?"))
```

Cable 2: Anthropic Claude Cable (clients/anthropic_client.py)

```python
# clients/anthropic_client.py
# Run from the project root: python clients/anthropic_client.py
import asyncio
import os

import anthropic
from mcp import ClientSession, StdioServerParameters
from mcp.client.stdio import stdio_client

MODEL = "claude-opus-4-5-20251101"

# Async client so API calls don't block the event loop the MCP session runs on
ai_client = anthropic.AsyncAnthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))
server_connection = StdioServerParameters(
    command="python",
    args=["server.py"],
    env={"DATABASE_PATH": "company_data.db"},
)


def tool_text(result) -> str:
    """Joins the text blocks of an MCP tool result into one string."""
    return "\n".join(b.text for b in result.content if hasattr(b, "text"))


async def ask_claude(question: str):
    async with stdio_client(server_connection) as (read, write):
        async with ClientSession(read, write) as session:
            await session.initialize()
            tools = await session.list_tools()
            print(f"🔌 Connected! Found {len(tools.tools)} tools")

            # Translate MCP tool descriptions into Anthropic's tool format
            claude_tools = [{
                "name": t.name,
                "description": t.description,
                "input_schema": t.inputSchema,
            } for t in tools.tools]
            messages = [{"role": "user", "content": question}]

            # Ask Claude
            response = await ai_client.messages.create(
                model=MODEL, max_tokens=1000,
                messages=messages, tools=claude_tools,
            )

            # Process tool calls, collecting every result into one user turn
            tool_results = []
            for block in response.content:
                if block.type == "tool_use":
                    # Execute on our local server
                    result = await session.call_tool(block.name, arguments=block.input)
                    data = tool_text(result)
                    print(f"📊 Server Data: {data[:100]}...")
                    tool_results.append({
                        "type": "tool_result",
                        "tool_use_id": block.id,
                        "content": data,
                    })

            if tool_results:
                # Get final answer with real data
                messages.append({"role": "assistant", "content": response.content})
                messages.append({"role": "user", "content": tool_results})
                response = await ai_client.messages.create(
                    model=MODEL, max_tokens=1000,
                    messages=messages, tools=claude_tools,
                )

            # Extract text response
            for block in response.content:
                if block.type == "text":
                    print(f"🤖 AI Analysis: {block.text}")


if __name__ == "__main__":
    asyncio.run(ask_claude("Do we need to reorder any GPUs soon?"))
```

The Mind-Blowing Workflow

- You ask a simple question about inventory

- GPT-5/Claude reasons it needs data, chooses the right tool

- Your client sends the request to your local MCP server

- Your server queries your local database (the database itself never leaves your machine; only the query result is sent on)

- The AI receives actual live data and gives you an intelligent answer
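The five steps above can be sketched without any network calls. Everything here is a stand-in: `fake_model` plays the LLM, the `TOOLS` dict plays the MCP server, and the message dictionaries are a simplified shape of my own rather than any vendor's wire format.

```python
import json
from typing import Callable

# Stand-in for the data behind the server
INVENTORY = {"GeForce RTX 4090": 5, "Radeon RX 7900 XTX": 8}


def check_stock_level(item_name: str) -> str:
    level = INVENTORY.get(item_name)
    return f"Stock level: {level}" if level is not None else "Item not found."


# Stand-in for the server's tool registry
TOOLS: dict[str, Callable[..., str]] = {"check_stock_level": check_stock_level}


def fake_model(messages: list[dict]) -> dict:
    """Pretend LLM: asks for a tool first, then answers from the tool result."""
    last = messages[-1]
    if last["role"] == "tool":
        return {"role": "assistant", "content": f"The server says: {last['content']}"}
    return {
        "role": "assistant",
        "tool_call": {
            "name": "check_stock_level",
            "arguments": json.dumps({"item_name": "GeForce RTX 4090"}),
        },
    }


def run_turn(question: str) -> str:
    messages = [{"role": "user", "content": question}]   # 1. you ask
    reply = fake_model(messages)                          # 2. model picks a tool
    while "tool_call" in reply:
        call = reply["tool_call"]
        args = json.loads(call["arguments"])              # 3. client forwards the request
        result = TOOLS[call["name"]](**args)              # 4. server runs it locally
        messages += [reply, {"role": "tool", "content": result}]
        reply = fake_model(messages)                      # 5. model answers with real data
    return reply["content"]


if __name__ == "__main__":
    print(run_turn("How many RTX 4090s do we have?"))
```

The loop in `run_turn` is the part both real clients share. The vendor-specific code is mostly about how the tool request and the tool result are packaged.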

The magic: Your server.py never changes. Switch from GPT-5 to Claude by changing only the client cable. Your database setup is separate and version-controlled.
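Some clients need no cable code at all. Claude Desktop, mentioned earlier as a possible client, reads its MCP servers from a `claude_desktop_config.json` file under a top-level `mcpServers` key. The sketch below only builds that JSON. The entry name `inventory`, the helper name, and both paths are placeholders I made up, so check the current Claude Desktop documentation for where the file lives on your system.

```python
import json


def desktop_config(server_path: str, db_path: str, name: str = "inventory") -> dict:
    """Builds an mcpServers entry that launches server.py with our DB path."""
    return {
        "mcpServers": {
            name: {
                "command": "python",
                "args": [server_path],
                "env": {"DATABASE_PATH": db_path},
            }
        }
    }


if __name__ == "__main__":
    # Placeholder absolute paths; replace with your own
    config = desktop_config(
        "/abs/path/to/mcp_data_dock/server.py",
        "/abs/path/to/mcp_data_dock/company_data.db",
    )
    print(json.dumps(config, indent=2))
```

Absolute paths matter here because the desktop app will not launch the server from your project directory.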

Real-World Magic That Doesn’t Suck

The Universal Enterprise Brain: Build one MCP server for your CRM. Marketing uses it via GPT-5, Sales via Claude, Finance via your custom app—all with identical, live data.

The Compliance Dream: Healthcare data stays in your secure network. AIs “visit” through MCP, and the only thing that leaves is what your tools choose to return, so you control exactly what a model can see. Auditors will hug you.

Debugging Superpower: Connect production logs via MCP. Ask any AI: “Show me errors from users in Europe in the last hour.” The AI queries sanitized logs through your secure server.
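Use cases like these only hold up if the server limits what a query can do, which the `query_inventory` demo tool in server.py does not. Below is a minimal sketch of one way to tighten it, assuming SQLite. It uses the standard library's synchronous `sqlite3` instead of `aiosqlite` to stay self-contained, and `run_readonly_query` is a hypothetical helper, not part of any MCP SDK.

```python
import sqlite3


def run_readonly_query(db_path: str, sql_query: str, max_rows: int = 100) -> list[dict]:
    """Runs a single SELECT against a read-only connection and caps the rows."""
    statement = sql_query.strip().rstrip(";").strip()
    # Deliberately strict: this also rejects a ';' inside a string literal
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("Only a single SELECT statement is allowed.")

    # mode=ro makes SQLite itself refuse writes, whatever the text check missed
    conn = sqlite3.connect(f"file:{db_path}?mode=ro", uri=True)
    try:
        conn.row_factory = sqlite3.Row
        rows = conn.execute(statement).fetchmany(max_rows)
        return [dict(row) for row in rows]
    finally:
        conn.close()


if __name__ == "__main__":
    # Assumes company_data.db was created by database/init.py
    print(run_readonly_query("company_data.db", "SELECT * FROM inventory"))
```

The text check is a convenience, not the real defense. The read-only connection is what stops a write, and for production data a dedicated low-privilege database account is the stronger equivalent.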

The Secret Sauce: Context Management

Traditional AI integrations cram everything into the prompt: database schemas, API docs, examples. This “context bloat” makes AIs dumber and costs a fortune.

With MCP:

- The AI only sees tool names, short descriptions, and each tool's input schema

- It chooses what to use

- Your server does the heavy lifting locally

- Only the result goes back to the AI

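This can cut token usage by as much as 90% when the alternative was pasting schemas and docs into every prompt, though the real saving depends on your workload. It also lets AIs work with datasets that would never fit in their context window.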
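"Only the result goes back" works best when the server also keeps that result small. Here is a sketch of a helper a tool could call before returning. The name `compact_result`, the payload keys, and the default cap of 20 rows are my own choices for illustration.

```python
import json


def compact_result(rows: list[dict], max_rows: int = 20) -> str:
    """Returns at most max_rows rows as JSON, plus enough metadata for the
    model to know whether it is looking at the full result."""
    payload = {
        "total_rows": len(rows),
        "truncated": len(rows) > max_rows,
        "rows": rows[:max_rows],
    }
    return json.dumps(payload)


if __name__ == "__main__":
    fake_rows = [{"id": i, "stock_level": i * 2} for i in range(50)]
    print(compact_result(fake_rows, max_rows=3))
```

Telling the model that the result was truncated lets it ask a narrower follow-up question instead of reasoning from partial data as if it were complete.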

Your New Reality

- Build clean MCP servers (separate data logic from tools)

- Create client cables for each AI you use

- Watch as every AI in your organization suddenly speaks the same language

The integration nightmare is over. The universal standard is here. You’re no longer an AI plumber—you’re an AI architect who builds permanent docks, not temporary bridges.

Stop building one-off integrations. Build one clean dock. The cables are trivial, and they work with every AI that comes next—today, tomorrow, and next year.

Want to go deeper? Check out the official MCP specification and browse awesome-mcp for servers that connect to everything from GitHub to Google Calendar. The ecosystem is growing, and your dock will work with all of it.